What is Multi-Tenancy?

- A single instance of the software runs on a server, serving multiple client organizations (tenants)

- The application is designed to virtually partition its data and configuration for each tenant

- Essential attribute of Cloud Computing

- Tenant is "my user who has her own users"

- Multi-tenancy is not to the tenant's advantage; instead, it benefits the multi-tenancy provider

Multi-tenancy can be introduced at either of two levels:

- Hypervisor level Isolation

- DB level Isolation

Hypervisor level Isolation

- Maps the physical machine to a virtualized machine

- The hypervisor partitions the hardware at a finer granularity

- Improves efficiency by running more tenants on the same physical machine

- Provides cleanest separation

- Fewer security concerns

- Easier cloud adoption

- Virtualization introduces a certain percentage of overhead

DB level Isolation

- Re-architect the underlying data layer

- Computing resources and application code shared between all the tenants on a server

- Introduce distributed and partitioned DB

- The degree of isolation is only as good as the rewritten queries

- Approaches for DB level Isolation:

  - Separated Databases

  - Shared Database, Separate Schemas

  - Shared Database, Shared Schema

- No VM overhead

Types of DB level Isolation:

- Separated Databases

- Shared Database, Separate Schemas

- Shared Database, Shared Schemas

Separated Databases

- Each tenant has its own set of data, isolated from others

- Metadata associates each database with the correct tenant

- Easy to extend the application's data model to meet tenants' individual needs

- Higher costs for hardware, equipment maintenance, and tenant data backups
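
The separated-databases approach can be sketched as follows. This is a minimal illustration, not a production design: in-memory SQLite databases stand in for separate database servers, and the names `TENANT_DATABASES`, `provision_tenant`, `connection_for`, the `invoices` table, and the tenant names are all hypothetical.

```python
import sqlite3

# Hypothetical tenant catalog: the "metadata" that maps a tenant to its database.
# In-memory SQLite databases stand in for separate database servers or files.
TENANT_DATABASES = {}


def provision_tenant(tenant_id):
    """Create a dedicated database for a tenant and register it in the catalog."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, amount REAL)")
    TENANT_DATABASES[tenant_id] = conn
    return conn


def connection_for(tenant_id):
    """Look up the tenant's database; unknown tenants are rejected."""
    try:
        return TENANT_DATABASES[tenant_id]
    except KeyError:
        raise LookupError(f"no database registered for tenant {tenant_id!r}")


def column_names(tenant_id):
    """Return the column names of the tenant's invoices table."""
    rows = connection_for(tenant_id).execute("PRAGMA table_info(invoices)").fetchall()
    return [row[1] for row in rows]


provision_tenant("acme")
provision_tenant("globex")

# The databases are independent, so one tenant's data model can be extended alone.
connection_for("acme").execute("ALTER TABLE invoices ADD COLUMN po_number TEXT")
connection_for("acme").execute(
    "INSERT INTO invoices (amount, po_number) VALUES (?, ?)", (120.0, "PO-1")
)
connection_for("globex").execute("INSERT INTO invoices (amount) VALUES (?)", (75.5,))
```

Here only the hypothetical tenant "acme" gains the extra `po_number` column, which illustrates why per-tenant extensions are easy in this model; the price is one database to provision, maintain, and back up per tenant.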

Shared Database, Separate Schemas

- Multiple tenants in the same database

- Each tenant has its own database schema

- Moderate degree of logical data isolation

- Tenant data is harder to restore in the event of a failure

- Appropriate when each database has a small number of tables

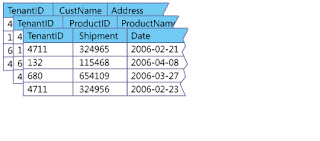
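
A rough sketch of the shared-database, separate-schemas idea is shown below. It rests on a loud assumption: SQLite has no `CREATE SCHEMA`, so `ATTACH DATABASE` is used as a stand-in to get schema-qualified table names on one connection. On a server database the same pattern would typically use real schemas. The helper names, the `customers` table, and the schema names are hypothetical.

```python
import sqlite3

# One shared connection; each tenant gets its own schema (namespace).
# Assumption: ATTACH DATABASE stands in for CREATE SCHEMA, which SQLite lacks.
# isolation_level=None selects autocommit, since ATTACH cannot run inside a transaction.
conn = sqlite3.connect(":memory:", isolation_level=None)


def _checked(schema):
    """Schema names cannot be bound as parameters, so validate before interpolating."""
    if not schema.isidentifier():
        raise ValueError(f"invalid schema name: {schema!r}")
    return schema


def create_tenant_schema(schema):
    """Create the tenant's namespace and its own copy of the tables."""
    schema = _checked(schema)
    conn.execute(f"ATTACH DATABASE ':memory:' AS {schema}")
    conn.execute(f"CREATE TABLE {schema}.customers (id INTEGER PRIMARY KEY, name TEXT)")


def add_customer(schema, name):
    """Insert into the tenant's own customers table."""
    conn.execute(f"INSERT INTO {_checked(schema)}.customers (name) VALUES (?)", (name,))


def list_customers(schema):
    """Read only from the tenant's own customers table."""
    rows = conn.execute(f"SELECT name FROM {_checked(schema)}.customers ORDER BY id")
    return [row[0] for row in rows]


create_tenant_schema("tenant_a")
create_tenant_schema("tenant_b")
add_customer("tenant_a", "Alice")
add_customer("tenant_b", "Bob")
add_customer("tenant_b", "Carol")
```

Each tenant has a table with the same name, and the schema prefix decides whose rows a query touches. Isolation is logical rather than physical, which matches the "moderate" rating above.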

Shared Database, Shared Schemas

- The same database and the same set of tables host multiple tenants' data

- Introduce an extra attribute "tenantId" in every table

- Append "where tenantId = $thisTenantId" to every query

- Lowest hardware and backup costs

- Additional development effort in the area of security

- Small number of servers required
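
The tenant filter described above might look like this in practice. This is a minimal sketch using SQLite; the `orders` table, the `orders_for` helper, and the sample tenants are hypothetical, and the column is spelled `tenant_id` here rather than `tenantId` purely as a naming choice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Every table carries a tenant_id column (the "tenantId" attribute above).
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, item TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO orders (tenant_id, item) VALUES (?, ?)",
    [("acme", "laptop"), ("acme", "monitor"), ("globex", "desk")],
)


def orders_for(tenant_id):
    """Return only the rows that belong to the given tenant."""
    rows = conn.execute(
        # The tenant filter is bound as a parameter, never concatenated into the SQL.
        "SELECT item FROM orders WHERE tenant_id = ? ORDER BY id",
        (tenant_id,),
    )
    return [row[0] for row in rows]
```

Routing every read through a helper like `orders_for` is one way to keep the filter from being forgotten. A query that omits the `WHERE tenant_id = ?` clause would return all tenants' rows, which is why this approach needs extra security effort.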

Virtualization vs Data Partitioning

| | Virtualization | Data Partitioning |
|---|---|---|
| Type of Implementation | Simple | Complex |
| Nature | Multiple instances of the application and database servers on the same hardware, as per the number of tenants | Single instance of the application for all the tenants with a shared database schema |
| Architecture Changes | No | Yes |
| Extension | Each tenant can have its own extension of the code and database schema. Difficult to maintain. | Handling custom extensions for each tenant can be harder to implement. Easy to maintain. |
| H/W Requirement | Very High | Very Low |
| Cost (Dev. + Service) | Very High | Low |
| Multi-tenant | Not 100% | 100% |
| Recommended | No | Yes |